Artificial Intelligence

Intelligence holds great temptation for researchers who want to understand the secrets of the universe. Researchers have hoped to build artificial intelligence on computers to simulate real intelligence. However, different researchers define artificial intelligence (AI) differently. From ‘Artificial Intelligence is the study of how to make computers do things at which, at the moment, people are better’ by Rich in 1983 [1] to ‘An intelligent system is one whose expected utility is the highest that can be achieved by any system with the same computational limitations’ by Russell [2], numerous researchers have contributed to the field of artificial intelligence. This essay briefly reviews the history of the field.

Starting from the idea that ‘the world can be represented as a physical symbol system’, traditional AI researchers used symbol systems to represent the relationships that exist in the world. For instance, if A is B’s parent, then B is A’s child. In Lisp this can be written as (equal (parent A B) (child B A)).
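The parent and child relationship described above can also be sketched outside Lisp. The following is only a minimal illustration in Python, assuming facts are stored as (relation, subject, object) tuples; the function name `apply_inverse_rule` and the tuple layout are hypothetical choices made for this example, not part of any traditional AI system.

```python
# Facts are (relation, subject, object) tuples; this layout is an assumption
# made for illustration only.
facts = {("parent", "A", "B")}


def apply_inverse_rule(facts, relation, inverse):
    """Return the facts extended with inverse(y, x) for every relation(x, y)."""
    derived = {(inverse, y, x) for (rel, x, y) in facts if rel == relation}
    return facts | derived


# Rule: if A is B's parent, then B is A's child.
knowledge = apply_inverse_rule(facts, "parent", "child")
print(sorted(knowledge))
```

The rule is applied purely by manipulating symbols: the program has no notion of what a parent is beyond the tuples it is given.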

Traditional AI researchers wanted ‘an explanation of can, cause, and knows in terms of a representation of the world by a system of interacting automata’ [6]. The researchers hoped that programs would have common sense, which would mean the computer becoming a ‘Logic Theory Machine’ [5]. To achieve this, AI researchers first need to know the structure of human knowledge: what is knowledge, and how can it be represented? These are problems for philosophy to solve. However, as Wittgenstein wrote, logic cannot deduce any new information, and knowledge itself is too complicated to be represented by symbols. This approach gradually lost favor about three decades after it began.

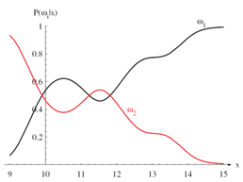

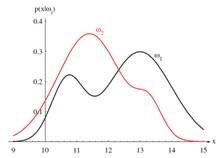

Modern AI research is termed machine learning, which is based on probability and statistical learning. Machine learning is concerned with the design and development of algorithms that allow computers to improve their performance over time based on data, such as sensor data or databases. It is divided into supervised learning and unsupervised learning, depending on whether a measurement of the outcome is available. A machine learning method chooses features of the data and then classifies the data or regresses it onto a one- or two-dimensional space. By analyzing training data, a model can be found that predicts the behavior of new objects and describes group tendencies. The problem is first represented in a high-dimensional space, and the number of dimensions is then reduced by a mathematical transformation. However, these advantages are not without drawbacks: it is difficult to find the proper mathematical transformation function.
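To make the idea of supervised learning with a dimension-reducing transformation concrete, here is a small sketch in plain Python. It assumes a two-class problem and uses one simple choice of transformation, projecting each point onto the difference between the two class means, which maps three-dimensional points to a single number. The function names, the toy data, and the choice of projection are all assumptions made for illustration; real problems usually need a far more carefully chosen transformation.

```python
def mean(points):
    """Component-wise mean of a list of equal-length points."""
    n = len(points)
    return [sum(p[i] for p in points) / n for i in range(len(points[0]))]


def project(direction, point):
    """Linear transformation from a high-dimensional point to one number."""
    return sum(w * x for w, x in zip(direction, point))


def fit(points, labels):
    """Learn a projection direction and a threshold from labelled data."""
    class0 = [p for p, y in zip(points, labels) if y == 0]
    class1 = [p for p, y in zip(points, labels) if y == 1]
    m0, m1 = mean(class0), mean(class1)
    direction = [b - a for a, b in zip(m0, m1)]
    # Place the threshold halfway between the projected class means.
    threshold = (project(direction, m0) + project(direction, m1)) / 2
    return direction, threshold


def predict(model, point):
    """Classify a new point using its one-dimensional projection."""
    direction, threshold = model
    return 1 if project(direction, point) > threshold else 0


# Toy training set: the labels are the measured outcomes (supervised learning).
train_points = [(0, 0, 1), (1, 0, 0), (0, 1, 0), (5, 5, 4), (4, 5, 5), (5, 4, 5)]
train_labels = [0, 0, 0, 1, 1, 1]

model = fit(train_points, train_labels)
print(predict(model, (0.5, 0.5, 0.5)), predict(model, (4, 4, 4)))
```

Without the labels the same data could only be grouped by similarity, which is the unsupervised setting mentioned above.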

Researchers are also learning from the real world and from society. This approach to AI research is termed computational intelligence. Neural networks (NN) learn from the human brain, genetic algorithms (GA) simulate natural evolution, and swarm intelligence (SI) learns from ants or birds; in sum, computational intelligence learns from natural life. For example, Particle Swarm Optimization (PSO) is a type of swarm intelligence. It was first introduced by Eberhart and Kennedy in 1995 [3, 4]. PSO was first designed to simulate birds seeking food, whose location is defined as a “cornfield vector”. A bird finds food through social cooperation with the other birds around it (within its neighborhood). In other words, when a flock of birds goes to seek food, a single bird does not know the position of the entire group; it knows only the positions of the few birds around it. The bird’s position changes quickly and is determined by the positions of its neighbors. Every bird seeks its own local best position, and the entire group then finds the global best. However, this approach also has a drawback: it lacks sufficient mathematical analysis.
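The behavior described above can be sketched as a short program. The version below is a common textbook form of PSO and is offered only as an illustration under several assumptions: it uses a global-best topology (every particle sees the best position found by the whole swarm, rather than only its neighborhood), an inertia weight `w`, which was a later addition to the algorithm, and acceleration coefficients `c1` and `c2`. The parameter values, the function names, and the sphere test function are choices made for this example, not values taken from the cited papers.

```python
import random


def pso(objective, dim, bounds, n_particles=20, n_iters=200,
        w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimise `objective` with a basic global-best particle swarm."""
    rng = random.Random(seed)
    lo, hi = bounds
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]                 # each particle's own best position
    pbest_val = [objective(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]  # best position of the swarm

    for _ in range(n_iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Velocity mixes momentum, the particle's own memory,
                # and the social pull toward the swarm's best position.
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                pos[i][d] += vel[i][d]
            val = objective(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val


def sphere(x):
    """Toy objective whose minimum (the 'food') is at the origin."""
    return sum(v * v for v in x)


best_pos, best_val = pso(sphere, dim=2, bounds=(-10.0, 10.0))
print(best_pos, best_val)
```

A neighborhood-based variant, closer to the description in the text, would replace `gbest` with the best position among each particle's neighbors only.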

Different problems need different methods, as the ‘no free lunch theorem’ suggests. Further research is needed in the AI field to find the best solutions to problems and to use intelligence to represent the physical world.

References

[1] Rich, E. (1983). Artificial Intelligence. New York: McGraw-Hill.

[2] Fogel, D. B. Evolutionary Computation. IEEE Press.

[3] Eberhart, R. C., Kennedy, J. (1995). A New Optimizer Using Particle Swarm Theory. Proc. Sixth International Symposium on Micro Machine and Human Science (Nagoya, Japan), IEEE Service Center, Piscataway, NJ, pp. 39-43.

[4] Kennedy, J., Eberhart, R. C. (1995). Particle Swarm Optimization. Proc. IEEE International Conference on Neural Networks (Perth, Australia), IEEE Service Center, Piscataway, NJ, pp. IV: 1942-1948.

[5] McCarthy, J. Programs with Common Sense.

[6] McCarthy, J., Hayes, P. J. Some Philosophical Problems from the Standpoint of Artificial Intelligence.